From early in his career as a cognitive scientist, Morten H. Christiansen, the William R. Kenan, Jr. Professor of Psychology in the College of Arts and Sciences (A&S), was skeptical of the prevailing academic view that biology governed humans’ remarkable facility for language.

Over the second half of the 20th century, scholars had advanced theories that our brains are hardwired with a “universal grammar,” or that we have evolved a special language instinct. Communication was widely seen in engineering terms, as the computer-like transfer of information.

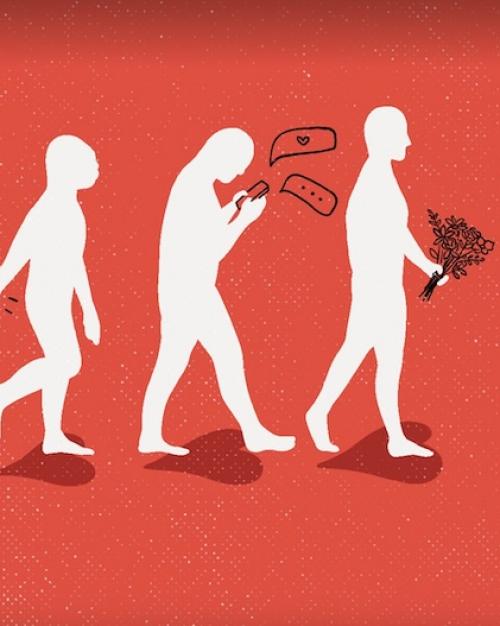

Now, in a new book written for a popular audience, “The Language Game: How Improvisation Created Language and Changed the World,” Christiansen and longtime collaborator Nick Chater, a professor at the University of Warwick, say it’s time for a radical reassessment of that orthodoxy.

Language, they argue, is a product of cultural, not biological, evolution. It is not bound by universal rules and fixed meanings; instead, it is flexible, developed through a continual flow of creative improvisation – a process they compare to a game of charades.

Christiansen, recently elected a foreign member of the Royal Danish Academy of Sciences and Letters and the recipient of an A&S New Frontier Grant, spoke to the Chronicle about the book.

Question: Why is charades, a game in which spoken language isn’t allowed, a good metaphor for how language works?

Answer: When you’re playing charades, you have to improvise all the time. And we’re doing so in language as well. The words, phrases and sentences we produce are just the tip of what we call the communication iceberg. It’s the submerged part – all the social and cultural knowledge, our ability to understand each other more generally – that has really made language possible and that makes language special. In charades, we’re not relying only on the gestures someone is making, but also on what we know about them and about the world, and all that information helps us interpret what these wild gesticulations actually mean. The person doing the acting also must take into account what they know about you. So it’s a collaborative effort, and language is fundamentally collaborative.

Q: How does that view of language differ from the one you consider outdated?

A: The notion of language as information transfer is still quite dominant in the language sciences. Language is treated as occurring between senders and receivers, and the receivers are passively waiting to get the message and then unpack it by running in reverse the same mechanism that was used to encode it. We suggest a very different process. You’re not waiting passively to unpack what I’m saying; you’re actively engaging with the individual words. And the words we produce are just clues. What brings out the meaning is all these other collaborative processes, like gestures in charades.

Q: You described words as just clues, but don’t they have very specific meanings that we can look up in dictionaries?

A: We often tend to think that words have fixed meanings, but once we look closer, it turns out their meaning is all over the place. Think of the word “light,” which means many different things in many different situations – a light we see, or light beer, or light infantry. Words get their meaning from the context in which we use them. This goes against the traditional way of looking at meaning as fixed packets of information. Now, another aspect of charades is that we can reuse gestures, and as we do that more often with the same people, they can become shortened or change meanings. So even though language is this messy concoction of improvisation, some conventionalized structures can evolve over time. This is how we get grammar.

Q: People increasingly are talking to computers, like Alexa and Siri. Do you share concerns about the danger artificial intelligence poses as it grows more advanced and fluent?

A: Our epilogue is about how language will save us from the “singularity” – the hypothesized time when computers become smarter than us and take over the world. Computers can do all sorts of cool stuff with language these days, but they do that based on having sifted through billions of text documents. They don’t actually understand anything in the sense that you and I understand language. Like apes, they don’t play charades. The way people have been developing AI systems is very different from the way human language works. And thus, we’re not as concerned as some in that regard. I think we’re very far off from a generalized intelligence.

Q: That’s reassuring – but where does that leave us?

A: We wanted to provide an alternative, comprehensive and – we think – exciting account of how language works, one that adds nuance to what it means to be human. It gives us a better way to understand this amazing communicative ability that allows us to pass on knowledge from one generation to the next, that allows us to develop culture, technologies and societies. Underlying all of this is our common humanity – this is to say that human language is fundamentally collaborative. So in that sense we want to leave our readers with a positive outlook on language and the human condition.